Exploring the Ethical Implications of AI-Based Digital Assistants

21 September 2025

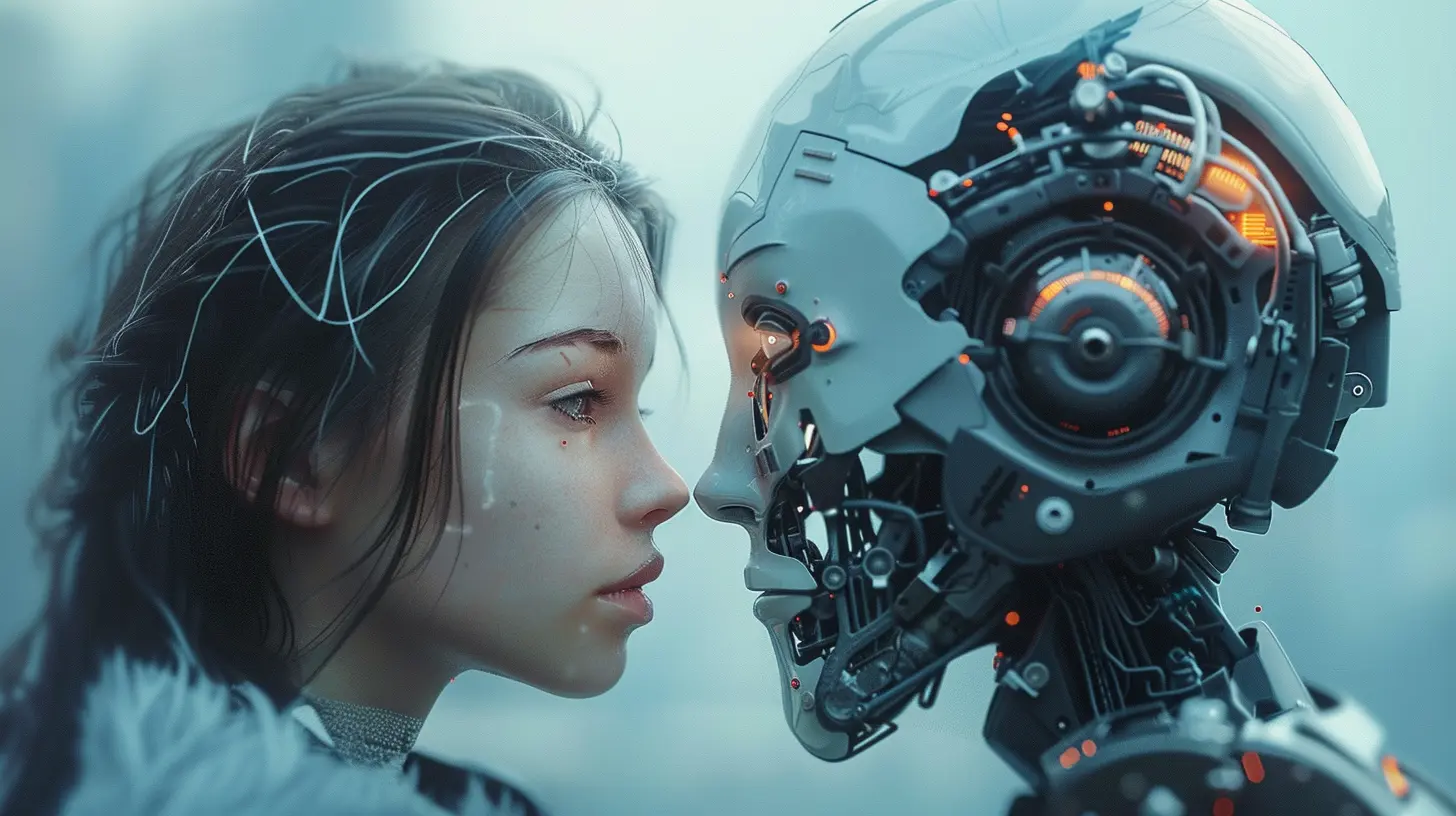

Artificial intelligence has leaped out of science fiction and into our daily lives. One of the most common forms? Digital assistants. Whether it’s Siri playing music on your iPhone, Alexa dimming the lights, or Google Assistant managing your calendar, these AI-based helpers are everywhere. They’re making life easier, more connected, and, well, a little more futuristic.

But here’s the thing: as helpful as digital assistants are, they raise some serious ethical questions. And I mean, really serious ones.

Let’s dig into the ethical maze surrounding AI-based digital assistants — what’s at stake, what’s working, and what still needs improvement.

What Are AI-Based Digital Assistants, Really?

First off, let’s break it down. An AI-based digital assistant is basically a software agent powered by artificial intelligence. It uses natural language processing (NLP), machine learning, and big data to interact with users, understand commands, and perform tasks. We’re talking about tools like:

- Amazon Alexa

- Apple Siri

- Google Assistant

- Microsoft Cortana (RIP, kind of)

- Samsung Bixby (yes, it’s still around)

These assistants are designed to learn from your behavior, anticipate needs, and respond like a human would — or at least try to. That’s both cool… and potentially creepy.

The Convenience vs. Conscience Dilemma

Let’s face it: digital assistants are crazy convenient. You say “Hey Google, what’s the weather?” and boom, you’re good to go. No typing, no scrolling. But convenience doesn’t come free. The toll? Your data, your privacy, and sometimes even your autonomy.

So here’s the million-dollar question:

At what point does convenience outweigh ethical responsibility?

1. Privacy: Is Your Assistant Always Listening?

Let’s get real: your AI assistant is always kinda listening. The wake word (like “Hey Siri”) is what activates it, but to hear that word the device sits on standby, constantly processing some level of audio.

Now, companies promise they’re not recording or storing everything. But there have been multiple cases where snippets of private conversations were captured, stored, and even reviewed by human employees. Yikes, right?

Imagine having a nosy roommate who doesn’t talk much, but is always hanging around and taking notes. That’s kinda what we’re dealing with.
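To make the “always kinda listening” idea a bit more concrete, here is a minimal Python sketch of a simplified wake-word gate: recent audio sits in a short rolling buffer that keeps getting overwritten, and only once a detector fires is a snippet kept. Everything in it is hypothetical. Frames are plain strings standing in for chunks of audio, and the `listen` function, its `is_wake_word` detector, and the `pre_roll` and `capture_len` parameters are illustrative names, not any vendor’s actual pipeline.

```python
from collections import deque
from typing import Callable, Iterable, List, Optional


def listen(
    frames: Iterable[str],
    is_wake_word: Callable[[str], bool],
    pre_roll: int = 1,      # hypothetical: frames kept from just before the wake word
    capture_len: int = 2,   # hypothetical: frames captured after the wake word
) -> List[List[str]]:
    """Return the snippets that would be kept (and could leave the device).

    Toy model only: each string stands in for a short chunk of audio.
    """
    recent: deque = deque(maxlen=pre_roll)  # rolling in-memory buffer; oldest frame falls off
    snippets: List[List[str]] = []
    current: Optional[List[str]] = None
    remaining = 0

    for frame in frames:
        if current is not None:
            # After the wake word, frames are captured for a fixed window.
            current.append(frame)
            remaining -= 1
            if remaining == 0:
                snippets.append(current)
                current = None
            continue
        if is_wake_word(frame):
            # Wake word detected: keep the pre-roll plus the trigger frame, then start capturing.
            current = list(recent) + [frame]
            recent.clear()
            remaining = capture_len
            if remaining == 0:
                snippets.append(current)
                current = None
            continue
        # Everything else is only held briefly in the buffer, then discarded.
        recent.append(frame)

    if current is not None:
        snippets.append(current)  # stream ended mid-capture
    return snippets


frames = ["private chat", "more chat", "hey assistant", "what's the weather", "thanks", "private again"]
print(listen(frames, lambda f: f == "hey assistant"))
# → [['more chat', 'hey assistant', "what's the weather", 'thanks']]
```

With an exact-match detector, only the frames around “hey assistant” are kept, and “private chat” never leaves the buffer. Swap in a sloppier detector, such as one that fires on any frame starting with “hey”, and an ordinary sentence gets captured instead, which is roughly how accidental recordings like the ones described above can happen.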

Ethical Concern:

Recording without consent goes against basic privacy rights. Users should have clear knowledge of what is being collected, how it’s stored, and what it’s used for.

Potential Fix:

Greater transparency, plus more user control over their data (such as opt-in features and easy-to-use privacy dashboards), would help a lot.

2. Consent and User Agreement: Did You Really Read That TOS?

Be honest: have you ever read every word of a Terms of Service agreement? Yeah, didn’t think so. You’re not alone.

Digital assistants often hide crucial data collection info in these documents. So technically, you “consented,” but did you really know what you were agreeing to?

Ethical Concern:

Informed consent is the cornerstone of ethical AI. If users don’t truly understand what they’re agreeing to, the entire idea of “consent” becomes a joke.

Potential Fix:

Simplified, jargon-free explanations of privacy policies, plus regular notices when those policies change, would make a big difference.

3. Bias and Discrimination: Is Your Assistant Fair?

Here’s something most folks don’t think about: digital assistants can be biased. Not on purpose (they’re not evil robots), but because they learn from data, and data reflects human behavior. And humans? Well, we’re far from perfect.

Studies have shown that digital assistants may respond better to certain accents, genders, or speech patterns. They can struggle with non-native English speakers or misinterpret commands from people with speech impairments.

Ethical Concern:

AI should serve everyone equally. If certain groups are underserved or ignored, that signals systemic bias, and that’s a huge problem.

Potential Fix:

Training AI on diverse, inclusive datasets and routine audits by diverse teams can reduce bias and create a more level playing field.

4. Emotional Manipulation: Are They Getting Too Smart?

Digital assistants are getting better at recognizing emotion. Some are even trained to adjust their tone based on your mood. Friendly, sure, but also a little unsettling.

What happens when your assistant encourages you to buy something because it “knows” you’re feeling low? Or nudges your behavior in subtle ways you barely notice?

Ethical Concern:

Using emotion to manipulate users crosses an ethical boundary. It’s comparable to emotional blackmail: subtle but dangerous.

Potential Fix:

Strict ethical boundaries must be drawn around AI-powered persuasion. Transparency about emotional tracking and clear opt-out features should be mandatory.

5. Dependency: Are We Losing Our Own Autonomy?

Let’s be honest. Many of us rely on digital assistants to make day-to-day decisions. From setting reminders to answering basic questions, they’re becoming our go-to for everything. But could that make us… dumber?

Think about it. If the assistant does all the thinking, remembering, and analyzing, what happens to our critical thinking? Our memory? Our independence?

Ethical Concern:

Overdependence on digital assistants can reduce human agency and cognitive abilities, leading to a passive, tech-reliant society.

Potential Fix:

AI should be designed to complement human decision-making, not replace it. Think of digital assistants as co-pilots rather than autopilots.

6. Surveillance: Are We Being Watched?

Let’s not ignore the elephant in the room: surveillance. Governments and corporations could potentially use digital assistants to monitor and track people’s behavior.

Sure, it’s not like Alexa is working for the FBI. But sensitive data stored or accessed by these assistants can be subpoenaed or hacked. That’s… terrifying.

Ethical Concern:

Mass surveillance violates basic human rights. Without strict regulations, digital assistants can easily become surveillance tools.

Potential Fix:

Robust data encryption, strict access control, and laws that limit how assistant-collected data can be used for surveillance are crucial.

7. Children and Vulnerable Users: Who Protects Them?

Kids love talking to Alexa. It’s like a toy that talks back. But young users are more susceptible to persuasive nudges and less aware of privacy implications.

There’s also concern for elderly individuals who might rely on AI for help with reminders or medication without realizing how much data is being collected.

Ethical Concern:

Vulnerable groups need added protection against manipulation, data collection, and dependence.

Potential Fix:

Stronger parental controls, age-appropriate interaction design, and ethical standards for serving vulnerable users are essential.

The Corporate Dilemma: Profits vs. People

AI development and deployment are big business. And where there’s money, there’s motive. Tech companies make billions from advertising, user data, and device ecosystems.

So, are they really incentivized to put what’s ethically right ahead of what’s profitable? That’s a complicated question with no easy answers.

Real Talk:

If profit comes at the cost of user trust and wellbeing, companies need to answer for it, not just with words, but with action.

Regulation and Responsibility: Who’s in Charge Here?

Right now, there’s no global AI ethics cop. A patchwork of laws, recommendations, and guidelines exists, but nothing universal or binding.

Should governments step in more firmly? Should tech companies self-regulate? Is there a middle ground?

Here’s a thought:

Maybe what we need isn’t just regulation but a cultural shift in how we think about AI. Responsibility shouldn’t be optional. It should be baked into the system from day one.

So, What’s the Ethical Path Forward?

Digital assistants aren’t going away. In fact, they’re evolving fast into home automation hubs, healthcare assistants, and even emotional companions.

So, rather than ditching them, here’s what we (as a society) should focus on:

1. Creating Transparent Tech

Let users see what data is collected, why it’s used, and how it’s stored. No shady backdoors.

2. Building for Everyone

Make sure AI assistants work for people of all backgrounds, languages, and abilities.

3. Prioritizing Consent

Real consent, not consent hidden behind 20 pages of legal jargon.

4. Saying No to Manipulation

Draw a clear line between helpful nudges and emotional exploitation.

5. Supporting Healthy Dependency

Encourage balanced usage and critical thinking, especially among younger and older users.

Final Thoughts: A Mirror, Not a Monster

At the end of the day, AI-based digital assistants are a reflection of us: our values, our intentions, and our biases. They’re not monsters. But if we’re not careful, they can become tools that widen inequality, breach privacy, and strip away autonomy.

Think of AI ethics like the GPS of tech development. Without it, we might still move forward, but we’ll probably get lost along the way.

Let’s make sure we’re heading in the right direction.

All images in this post were generated using AI tools.

Category:

Digital Assistants

Author:

Adeline Taylor

Discussion

Rory McCabe

This article brilliantly highlights the ethical considerations surrounding AI-based digital assistants. It raises essential questions about privacy, consent, and accountability, urging developers and users alike to prioritize responsible AI practices for a better future.

October 9, 2025 at 3:11 AM

Adeline Taylor

Thank you for your insightful comment! I'm glad you found the article's focus on ethics, privacy, and accountability important for shaping responsible AI practices.